Random Walks#

Author:

Date:

Time spent on this assignment:

Remember to execute this cell to load numpy and pylab.

Exercise 1: Unbiased Random Walks#

In this exercise we are going to start by modeling a random walk. A random walk starts at some location \(x_0\) at a time \(t=0\) and then continues to take random steps at each next unit in time. Letting, \(x_0=0\), we can model future steps as

where \(\delta\) is a random step. Lots of different physical processes could be modelled by random walks:

Dust in the air (or pollen in water): One of the earliest use of random walks was by Einstein who explained an observation of Robert Brown (see here ) who saw grains of pollen suspended in water bouncing randomly. This random bouncing comes from large numbers of invisible atoms colliding with the grains of pollen causing them to randomly jiggle. Einstein analyzed this effect and argued that it was strong evidence for the existence of atoms despite not being able to see them.

Coin Flips: Consider flipping a coin many times treating heads as +1 and tails as -1. We can think of the coin flips as a random walk and the total sum as the surplus of heads over tails.

A person randomly taking steps left and right with each step.

Each of these cases can be represented by a random walk although we might have to use a different step for \(\delta\). For example, in the case of a coin-flip we should let \(\delta\) be either +1 or -1 whereas for a person walking we might let \(\delta\) by selected continuously from some range. For most of this exercise, let us use

where \(\sigma\) is a number which represents an effective step-size and \(N(0,1)\) is a gaussian with mean 0 and standard deviation 1 generated by np.random.randn()

Notice that for each of these walks, if we were to watch just one walk - e.g. a single person moving back and forth or a single pollen - it would look pretty random. It’s only if we look at where the particle is at a moment in time over the distribution of many walks, can we say something quantitative about where we think the particle (or coin sum or person) might be.

a. Simulating a random walk#

Let’s start by writing code which simulates a random walk. Your goal is to write a function

def RandomWalk(NumSteps, sigma):

# do stuff

return np.array(myWalk)

which takes a step-size sigma and a number of steps and returns a numpy array that gives the walk at each step. Make sure that your first location in your myWalk array is the origin.

Generate 10 different random walks each of 1000 steps with step-sizes of \(\sigma=6\)

walks=[]

for walk in range(0,10):

walks.append(RandomWalk(1000,6))

walks=np.array(walks)

Now that we have these 10 random walks, we can visualize them in various ways:

Plot the location \(x\) as a function of the time-step \(t\). Notice how these walks all start at the origin and be spread out.

Animate the walks by calling

Animate1D(walks). This may take about 30 seconds to run and will show you your 10 individual walks wandering around.

### Answer Here

b. Random Walk Averages#

Since each random walk is different, it is useful to look at properties of the distribution over walks. We are going to start by computing averages over a large number of walks. The properites we will focus on is

(1) The average \(\langle x_t\rangle\) over the walks at time \(t\).

(2) The mean absolute deviation (MAD) \(\langle |x_t| \rangle\) over the walks at time \(t\).

(3) The standard deviations \(\sqrt{\langle x_t^2 \rangle - \langle x_t \rangle^2}\) over the walks.

For a random walk of 1000 steps each of these three quantities should be arrays of lengths 1001 (because you start at zero).

To get good statistics, we will average the above quantities over 10,000 walks. Depending on how you implement this, 1001\(\times\)10000 floating point numbers might take up too much memory, so I’d recommend instead keeping a numpy array of length 10,000, stepping them all together, and taking the averages 1-3 at each timestep. (that is, invert the loops from above)

Make a plot of the average position, mean absolute deviation, and standard deviation all on the same plot. Things of note:

The average is essentially zero. With a little thought you can convince yourself that (being statistically equal to) zero is the expected answer. Since it’s just as likely that you go left as right, on average you don’t go anywhere.

If you were to move in the +x direction every time, you’d expect the standard deviation to increase linearly with time. You should see a different dependence for a random walk.

The average distance of the distribution over walks is significantly slower than linear, as might be expected since half the time you’re heading back toward the origin. In fact it goes as \(\sqrt{t}\). This is called diffusive motion. Verify that your standard deviation scales this way by making a log-log plot of the standard deviation.

On a log-log plot the standard deviation is linear (which means that \(\sigma \propto t^\alpha\)). Don’t graph the t=0 point at the origin.

Find the value of \(\alpha\) by computing the slope of your (log-log) plot using

np.polyfit. Show it goes as \(t^{1/2}\)

### Answer Here

c. The Full Distribution#

So far we’ve been looking at some summary statistics as of the distribution such as the average and standard deviation. Now, we’d like to look at the full distribution over walks.

We will start by generating the 10 walks (don’t plot them) that we generated earlier and this time animating them with Animate1D(walks,make_hist=True). This time the animation of the particles bouncing around will be generated with a histogram on top which gives the full distribution of the walks. At the beginning you should see the histogram has all 10 particles in one places. During the animiation, it will quickly end up each bin has only one particle in it although occassionally you will see 2 (and maybe 3) particles per bin. Go ahead and animate it.

### Answer Here

Now let’s do this again but instead of just 10 walks, let’s do it with 1000 walks. Here the histogram is a better representation of the distribution over many walks. As a function of time you should see something that looks like a Gaussian spreading out.

### Answer Here

Now instead of animating this, we’d like to plot a histogram of the distribution after 2 steps, 10 steps, 100 steps, and 500 steps.

To do this, you want to plot a histogram of your distribution - i.e. something like

plt.hist(walks_step100,bins=50,density=True,alpha=0.5,label='t=200')

Do this for 100,000 walks. Use your large number of walkers method from above to plot the histogram.

### Answer Here

You should see the distribution always looks gaussian with the width of the gaussian growing as the number of steps grow. Notice that from these distributions, we can also compute the various quantities - i.e \(\sigma_{100} = \sqrt{\langle x_{100}^2 \rangle - \langle x_{100} \rangle^2}\) that we compute above. We can take the output of

values,edges,_=plt.hist(walks_step100,bins=50,density=True)

delta_x=edges[1]-edges[0]

plt.close()

center=(edges[:-1]+edges[1:])/2.

which gives us the (normalized) values of the histogram and the centers of the bins. From the variables values, delta_x and center compute \(\sigma_{100}\) and verify that it is (essentially) the same value that you discovered above. To do this you will need to take an integral. Think about how to get that integral from this information.

### Answer Here

Notice that both from the animation and from the histograms you’ve produced below, it seems pretty clear that while each random walk is, well random, there is something deterministic about the distribution as it changes in time. In the next exercise, we will try to model the deterministic diffusion of the distribution as a function of time.

Exercise 2: Modeling the diffusion deterministically#

a. Representing the initial distribution#

In this exercise, we’d like to deterministically model how the distribution over random walks changes in time. What this means is that we want to have some equation of motion that starts with the histogram at step \(t=1\) and then generates the histogram at other time step \(t\). In the dynamics section, we stored the position and velocity of a planet at a moment in time. Now, we have to store a vector which stores the entire histogram or distribution at a moment in time.

Start with a vector in the initial condition \(\rho(t=1)=\exp[-x^2/2\sigma^2]\) where \(\sigma=6\) which we can represent on a grid with \(dx=1\) as

x0 = 400

dx = 1.0

xs = np.arange(-x0, x0, dx)

sigma = 6

rho = np.exp(-xs**2 / (2 * sigma**2))

Go ahead and plot this as

plt.plot(xs, rho/(np.sum(rho)*dx))

(we scale it so that the integral of our density is 1.0 like density=True does when we generate a histogram).

### Answer Here

b. Time evolving the distribution#

Now we need an equation of motion which tells us how to change the distribution. Stochastic random walks are represented by the diffusion equation:

Here \(D=a^2/2\) is the diffusion constant where \(a\) is our step size (in this case 6).

To time-step with this differential equation, we need to figure out how to take \(\rho\) and apply \(\frac{\partial^2 \rho}{\partial x^2}\). We will assume that \(\rho=0\) at the boundary at all time so we don’t have to worry about the boundary conditions. To apply this partial derivative what we should do is use the finite-difference stencil that

Again we have that \(dx=1\) and we will use \(dt=dx^2/100\). Using this, write a function

def Step(rho,D,dt,dx):

# compute d^2rho/dx^2

# put in the constant and step

return rho

which takes the vector representing \(\rho\) and moves it forward \(dt\). (It may be helpful to use np.roll(rho,1) and np.roll(rho,-1))

Compare the distribution that you get at times \(t=2.0, t=10.0\), and \(t=30.0\) against the histograms you got from the random walks (remembering to also scale the density of the random walks histograms like plt.hist(mywalk_step2,bins=50,density=True).

### Answer Here

Exercise 3: Two Dimensional Random Walks#

a. Simulating a Random Walk#

Let us now work with a two-dimensional random walk. Starting at \(x=(0,0)\) generate 5 two-dimensional gaussian random walks with a \(\sigma=6\) each of 5000 steps. Plot \(y\) vs \(x\) for these 5 walks.

### Answer Here

b. Self Similarity#

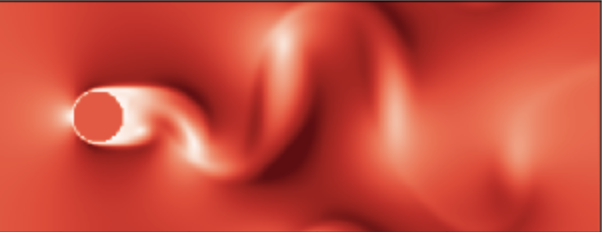

Brownian random walks are self-similar. As you zoom into pieces of the figure, it looks qualitatively the same on all scales. Make a random walk of 128,000 steps. Then plot (on separate plots)

the random walk

the first half of the random walk (multiplying the \(x\) and \(y\) by 2)

the first quarter of the random walk (multiplying the \(x\) and \(y\) by 4)

the first eighth of the random walk (multiplying the \(x\) and \(y\) by 8)

the first 1/16 of the random walk (multiplying the \(x\) and \(y\) by 16)

the first 1/32 of the random walk (multiplying the \(x\) and \(y\) by 32)

Notice that these plots all look qualitatively similar. This is what I get:

### Answer Here

c. Standard Deviation#

Like in the 1D case, we expect the average \(\langle x_k \rangle\) to be zero. Let’s just go ahead and plot the standard deviation

for 1000 copies of the gaussian plot.

You should obtain a similar curve to that obtained in the one-dimensional case, but increased somewhat in amplitude. Even in two-dimensions, the displacement goes with the square root of time. Show this by also plotting it on a log-log plot and fitting the slope.

It turns out that you also see this behavior in three dimensions. In general, the standard deviation always scales as the square root of time for any physical system undergoing diffusive motion.

d. Animation (extra credit: 5 points)#

Animate the two-dimensional random walk. You may find the animation code from previous exercise helpful.

Exercise 3: Diffusion Limited Aggregation#

In the previous exercises we learned some properties of random walks. It turns out that some crystal formation can be modeled accurately using random walks. You can see some beautiful pictures of this here

The simplest model of these systems is the following: A single particle (be that an atom or a cluster of atoms) is initially stuck to a substrate. Particles in the environment randomly move around until they find that first particle, at which point they stick to it and stop moving.

a. DLA onto a core point at the origin#

In DLA, we do the following:

Start with a particle at some fixed point (say the center of a box). This is the first stuck particle.

Repeat the following:

Add a new particle someplace on the boundary (anywhere on the four walls).

Move this particle using a discrete random walk (left, right, up, down). If it tries to move in the direction of one of the walls, just reject that move.

Once it is adjacent to (up or down or left or right) a currently stuck particle, it becomes a stuck particle and you create a new particle.

To accomplish this, I kept a two-dimensional numpy array which kept track of the location of the stuck particles and the currently moving particle.

At the end, plot the location of your stuck particles. To do this, use

plt.matshow(A)

where A is your numpy grid.

Start with a box of size \(100 \times 100\).

Use 1000 different particles.

This simulation is a little slow. On colab, it takes me approximately 2 minutes. For testing you might want to turn down the number of different particles that you use.

It also also interesting to see the picture when you have a larger box (\(200 \times 200\)) but this is much slower (and not required as part of the assignment).

### Answer Here

### Answer Here

b. DLA onto a surface (extra credit: 5 points)#

In the previous section you did DLA onto a core point. For crystal growth like MBE, there is an environment of particles which stick to a surface at the bottom (the substrate). Modify your DLA to come in at the top and stick either at the bottom or if they hit any previously stuck point. Plot the resulting figures.

### Answer Here

### Answer Here

Exercise 4: Symmetry Restoration#

a. One dimensional Discrete Walks#

So far we’ve been doing random walks with gaussian steps. We would like to see what changes if instead we did discrete walks - i.e. at each step we are going to walk either left exactly 6 units or right exactly 6 units.

Write code to do this random walk and then print the histogram on separate plots at

2 steps (with the gaussian histogram on top of it)

100 steps (with the gaussian histogram on top of it).

i.e. for the 2 step histogram I wrote

plt.hist(locs_discrete[:,2],bins=10)

plt.hist(locs[:,2],bins=10,alpha=0.3)

plt.show()

For the 2 step histogram you should see a qualitative difference. But once you get to 100 steps, it seems that there is no dependence on the distribution for either the discrete or gaussian steps. This is a form of universality (and something we will see even more starkly in the two-dimensional case below).

### Answer Here

b. Rotational Invariance in 2D#

Now we are going to show that in the 2D case, the discrete walks restore the rotational invariance of the gaussian walk. By this we mean that the distribution does not depend on the angle we look at.

To do this, plot the location of the 2000 2d coordinates at t=1000 steps.

Do this for both the discrete and Gaussian (continous) walks.

You should see that

(1) They look the same and

(2) More interestingly, the discrete case looks rotationally invariant (like a circle) even though it can only go up, down, left, right. This is an emergent rotational symmetry.

### Answer Here

Acknowledgements

Problems 1,2,3,4: Bryan Clark (original)

A different but related verision of problems 1(ab) and 3(ac) existed in an earlier version by George Gollin and Ryan Levy and Eli Chertkov

A similar version of problem 4 exists in James Sethna’s statistical mechanics textbook.